This site is under construction, and for internal testing purposes only. Please contact Jamie (schiel@mit.edu) with any questions.

Build your own software-defined 3D camera

Nanophotography is a new modular camera architecture that gives the user total design freedom. Existing commercial time-of-flight (ToF) systems (e.g., Microsoft Kinect) have hard-coded sampling structures that limit potential avenues of research. Our "software-defined" camera was devised to enable exploration of unconventional sampling methods and applications.

We're a team of researchers at the MIT Media Lab. This project began as a collaboration with the University of Waikato in New Zealand and was initially presented in a SIGGRAPH course. Since then, our evolving camera designs have led to many contributions in transient imaging research, and we're excited to empower other researchers with our open-source kit.

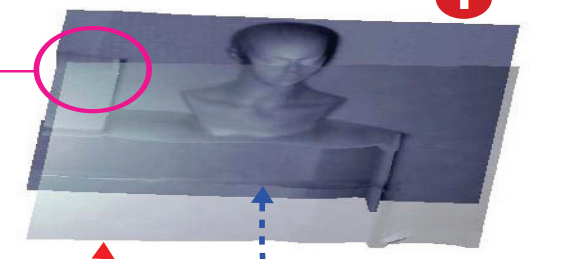

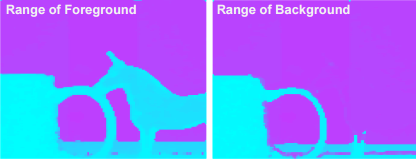

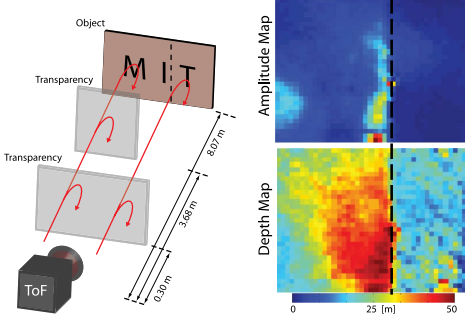

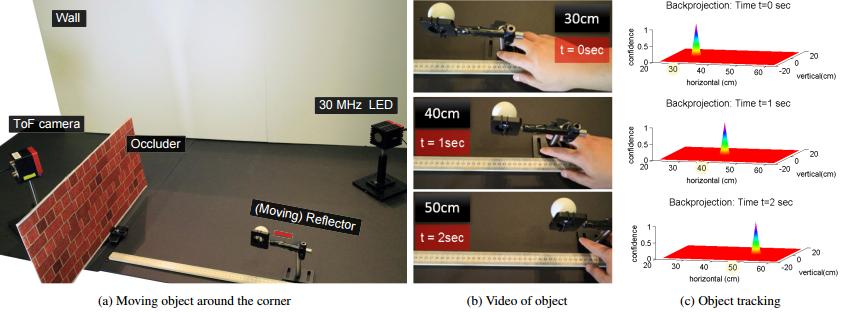

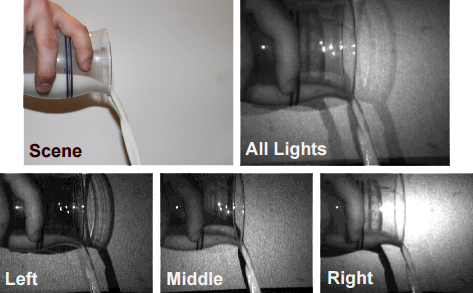

At its most basic, Nanophotography is a depth camera that can be used to acquire 3D information from a scene. However, because of the creative freedom of its adaptable sampling architecture, Nanophotography can do many things that conventional 3D cameras cannot. Two powerful examples are ultra-fast "light-sweep" videos that visualize the propagation of light through a scene, and the reconstruction of semi-transparent objects.

Many 3D systems work on a simple principle. Laser scanners, for instance, ping the scene with a pulse of light and measure the time it takes for the pulse to come back (this is also how police LIDAR speed guns work). In Nanophotography, we embed a special code into the ping, so when the light comes back we obtain additional information beyond the time of arrival.
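For the pulsed case, depth follows directly from the round-trip time: the pulse covers the distance to the object twice, so depth = c · t / 2. A minimal Python sketch of that conversion (the function names are our own, for illustration only):

```python
# Speed of light in vacuum, in metres per second.
SPEED_OF_LIGHT = 299_792_458.0


def depth_from_round_trip(round_trip_s: float) -> float:
    """Distance to the object (m) for a measured round-trip time (s).

    The pulse travels out to the object and back, so the one-way
    distance is half of the total path c * t.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def round_trip_from_depth(depth_m: float) -> float:
    """Inverse: time (s) for a pulse to return from an object depth_m away."""
    return 2.0 * depth_m / SPEED_OF_LIGHT


if __name__ == "__main__":
    t = round_trip_from_depth(1.5)  # an object 1.5 m away: roughly 10 ns
    print(f"round trip: {t * 1e9:.3f} ns")
    print(f"recovered depth: {depth_from_round_trip(t):.3f} m")
    print(f"depth per ns of round trip: {depth_from_round_trip(1e-9) * 100:.1f} cm")
```

One nanosecond of round-trip time corresponds to only about 15 cm of depth, which is why ToF timing has to work at nanosecond and sub-nanosecond scales.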

The name comes from the light source that is used. In femtophotography, a femtosecond pulse of light is sent into the scene. In nanophotography, a periodic signal with a period in the nanosecond range is fired at the scene. By using a demodulation scheme, we are able to image time profiles at sub-nanosecond resolution.
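To illustrate what demodulating a periodic signal means, the sketch below implements the conventional four-bucket scheme used by sinusoidal AMCW time-of-flight cameras: the sensor correlates the returning light with the reference signal at four phase offsets (0°, 90°, 180°, 270°), and an arctangent recovers the phase delay and hence depth. This is the standard sinusoidal baseline, not nanophotography's coded scheme; the 30 MHz frequency, the noiseless sample model, and the function names are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def simulate_buckets(depth_m, freq_hz, amplitude=1.0, offset=2.0):
    """Noiseless lock-in samples c_k = offset + amplitude * cos(phi + k * pi/2), k = 0..3.

    phi = 4*pi*f*d/c is the phase delay of light that travelled to depth d and back.
    """
    phi = 4.0 * math.pi * freq_hz * depth_m / SPEED_OF_LIGHT
    return [offset + amplitude * math.cos(phi + k * math.pi / 2) for k in range(4)]


def demodulate(samples, freq_hz):
    """Four-bucket demodulation: recover (depth, amplitude, offset) from c_0..c_3."""
    c0, c1, c2, c3 = samples
    # Under the model above, c3 - c1 = 2A sin(phi) and c0 - c2 = 2A cos(phi).
    phi = math.atan2(c3 - c1, c0 - c2) % (2.0 * math.pi)  # wrap into [0, 2*pi)
    amplitude = 0.5 * math.hypot(c3 - c1, c0 - c2)
    offset = 0.25 * (c0 + c1 + c2 + c3)
    depth = SPEED_OF_LIGHT * phi / (4.0 * math.pi * freq_hz)
    return depth, amplitude, offset


def unambiguous_range(freq_hz):
    """Depths that differ by a multiple of c / (2f) produce the same phase and alias."""
    return SPEED_OF_LIGHT / (2.0 * freq_hz)


if __name__ == "__main__":
    f = 30e6  # 30 MHz modulation: a period of about 33 ns
    print(demodulate(simulate_buckets(1.2, f), f))
    # A target exactly one unambiguous range further away wraps back to the same depth.
    print(demodulate(simulate_buckets(1.2 + unambiguous_range(f), f), f))
    print(f"unambiguous range: {unambiguous_range(f):.3f} m")
```

The wrap-around in the second call is the phase ambiguity that multi-frequency methods, such as Payne et al. (2009), are designed to resolve.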

Acquiring a light-sweep capture of a large scene takes 4 seconds in total with our research prototype hardware, compared with a few hours for femtophotography and 6 hours (including scene calibration) for the UBC method. We expect acquisition to drop to a fraction of a second by exploiting two key factors: (i) incremental advances in new generations of hardware, and (ii) a tailored ASIC or on-chip FPGA implementation.

A minor modification to commercial technology, such as Microsoft Kinect, is all that is needed. In particular, the FPGA or ASIC circuit needs to be reconfigured to send custom binary sequences to the illumination and the lock-in sensor. This must be combined with software that performs the inversion. In general, time-of-flight cameras are improving in almost every dimension, including cost, spatial resolution, time resolution, and form factor.
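To make "custom binary sequences" concrete, the sketch below generates one candidate code, a 31-chip maximum-length sequence (m-sequence), and computes what an ideal lock-in pixel would integrate for each delay between an on/off illumination code and a ±1 reference code. The choice of an m-sequence, the recurrence, and the function names are assumptions for illustration; this page does not specify which codes the kit uses.

```python
def m_sequence():
    """One period (31 chips) of a maximum-length binary sequence.

    Generated by the recurrence s[n+5] = s[n+2] XOR s[n], whose characteristic
    polynomial x^5 + x^2 + 1 is primitive over GF(2), so any non-zero seed
    gives period 2^5 - 1 = 31.
    """
    s = [1, 0, 0, 0, 0]  # arbitrary non-zero seed
    while len(s) < 31:
        n = len(s) - 5
        s.append(s[n + 2] ^ s[n])
    return s


def circular_correlation(illum, ref):
    """xi[k] = sum_i illum[i] * ref[(i + k) % N].

    This is what an ideal lock-in pixel integrates over one code period when
    the returning light is delayed by k chips relative to the reference.
    """
    n_chips = len(illum)
    return [sum(illum[i] * ref[(i + k) % n_chips] for i in range(n_chips))
            for k in range(n_chips)]


if __name__ == "__main__":
    illum = m_sequence()                 # light source code: on/off (1/0)
    ref = [2 * b - 1 for b in illum]     # sensor reference code: +1/-1
    xi = circular_correlation(illum, ref)
    print("ones in code:", sum(illum))
    print("correlation:", xi)
```

For this idealized pair the correlation is a single sharp peak (16 at zero delay, 0 at every other delay), so each return maps to one delay bin. On real hardware the measured correlation is smoothed (for example, by the finite rise time of the light source and sensor), which is where the inversion step comes in.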

Embedding custom codes, as we do, has pros and cons. The major cons are increased computation and ill-posed inverse problems. We expect the former to be addressed by improvements in computational power, and we overcome the latter by using sparse priors. This research prototype might influence a trend away from sinusoidal amplitude-modulated continuous-wave (AMCW) illumination, which has been the de facto model in many systems beyond time of flight. More work is needed to make this production quality, but the early outlook is promising.
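The sparse-prior idea can be illustrated with a toy single-pixel model: the measurement is a sum of delayed copies of a smoothed correlation function, one per return, and a greedy sparse solver finds the few returns that explain it. Everything here is an assumption for illustration: the Gaussian kernel stands in for a measured correlation function, the 32-bin grid and return positions are made up, and orthogonal matching pursuit (OMP) is one standard sparse solver, not necessarily the one used in the papers below. A kernel this smooth has almost no high-frequency content, so plain deconvolution would be badly conditioned; assuming only a couple of returns per pixel is what keeps the inversion well behaved.

```python
import math

N_BINS = 32  # time bins in one code period (hypothetical)


def gaussian_kernel(sigma=1.5):
    """Stand-in for a measured, smoothed correlation function (circular Gaussian)."""
    return [math.exp(-min(n, N_BINS - n) ** 2 / (2.0 * sigma ** 2))
            for n in range(N_BINS)]


def shifted(kernel, delay):
    """One column of the circulant forward matrix: the kernel delayed by `delay` bins."""
    return [kernel[(n - delay) % N_BINS] for n in range(N_BINS)]


def measure(kernel, returns):
    """Noiseless forward model: y = sum over returns of amplitude * shifted kernel."""
    y = [0.0] * N_BINS
    for delay, amplitude in returns.items():
        for n, value in enumerate(shifted(kernel, delay)):
            y[n] += amplitude * value
    return y


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def solve(a, b):
    """Solve the small linear system a x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def omp(y, kernel, sparsity):
    """Orthogonal matching pursuit over all possible delays.

    Each iteration adds the delay whose shifted kernel best matches the
    residual, then refits every selected amplitude by least squares.
    """
    support, coeffs, residual = [], [], list(y)
    for _ in range(sparsity):
        # Circulant columns all have the same norm, so raw inner products rank them fairly.
        best = max(range(N_BINS), key=lambda d: abs(dot(residual, shifted(kernel, d))))
        support.append(best)
        cols = [shifted(kernel, d) for d in support]
        gram = [[dot(ci, cj) for cj in cols] for ci in cols]
        coeffs = solve(gram, [dot(c, y) for c in cols])
        fit = [sum(a * c[n] for a, c in zip(coeffs, cols)) for n in range(N_BINS)]
        residual = [yi - fi for yi, fi in zip(y, fit)]
    return dict(zip(support, coeffs))


if __name__ == "__main__":
    k = gaussian_kernel()
    # Two overlapping returns in one pixel, e.g. a semi-transparent sheet at bin 10
    # and the wall behind it at bin 15 (made-up values).
    y = measure(k, {10: 0.5, 15: 1.0})
    for delay, amp in sorted(omp(y, k, sparsity=2).items()):
        print(f"delay bin {delay}: amplitude {amp:.3f}")
```

In this noiseless toy case, OMP with `sparsity=2` recovers both delay bins and their amplitudes even though the two responses overlap in the raw measurement.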

Resolving multipath interference in time-of-flight imaging via modulation frequency diversity and sparse regularization, A. Bhandari, et al., Optics Letters, 2014 - PDF

Occluded Imaging with Time of Flight Sensors, A. Kadambi, et al., In preparation - PDF

Super-Resolution in Phase Space, A. Bhandari, et al., ICASSP, 2015 - PDF

Coded Time-of-Flight Imaging for Calibration Free Fluorescence Lifetime Estimation, A. Bhandari, et al., OSA Imaging Science and Applications, 2014 - PDF

Phasor Imaging: A Generalization of Correlation-Based Time-of-Flight Imaging, M. Gupta, et al., ACM Transactions on Graphics, 2015 - PDF | Website

Locating and Classifying Fluorescent Tags Behind Turbid Layers Using Time-Resolved Inversion, G. Satat, et al., Nature Communications, 2015 - PDF | Website

Temporal Frequency Probing for 5D Transient Analysis of Global Light Transport, M. O'Toole, et al., ACM Transactions on Graphics, 2014 - PDF | Website

Diffuse Mirrors: "Looking Around Corners" Using Inexpensive Time-of-Flight Sensors, F. Heide, et al., CVPR, 2014 - PDF | Website

Imaging in scattering media using correlation image sensors and sparse convolutional coding, F. Heide, et al., Optics Express, 2014 - PDF | Website

Fourier Analysis on Transient Imaging with a Multifrequency Time-of-Flight Camera, J. Lin, et al., CVPR, 2014 - PDF | Website

Low-budget Transient Imaging using Photonic Mixer Devices, F. Heide, et al., ACM Transactions on Graphics, 2013 - Website

Femto-photography: Capturing and visualizing the propagation of light, A. Velten, et al., ACM Transactions on Graphics, 2013 - Website

CORNAR: Looking Around Corners using Femto-Photography, A. Velten, et al., Nature Communications 3:745, 2012 - Website

Single View Reflectance Capture using Multiplexed Scattering and Time-of-flight Imaging, N. Naik, et al., ACM Transactions on Graphics, 2011 - PDF | Website

Multiple frequency range imaging to remove measurement ambiguity, A.D. Payne, et al., Optical 3D Measurement Techniques, 2009 - PDF | Website

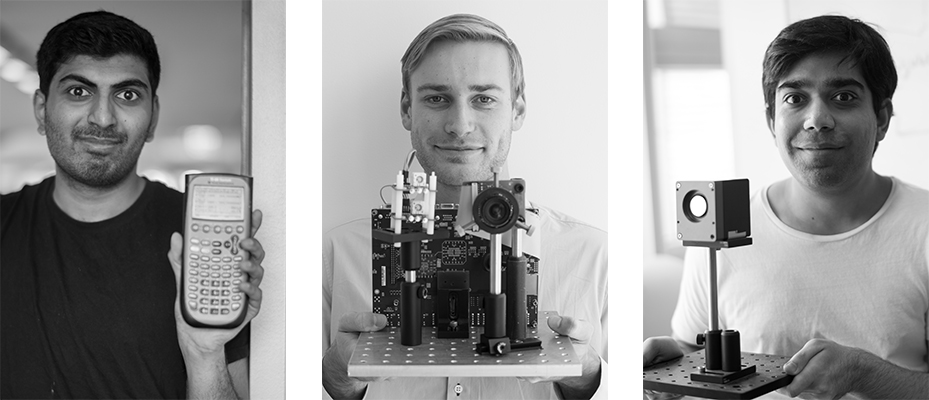

We're researchers in the Camera Culture group at the MIT Media Lab, supervised by Professor Ramesh Raskar. Feel free to contact us about our research. Specific technical questions about the DIY guide on this website should go to Jamie.

Achuta Kadambi (left), achoo@mit.edu

Jamie Schiel (middle), schiel@mit.edu

Ayush Bhandari (right), ayush@mit.edu

Ramesh Raskar, raskar@mit.edu

Engadget: MIT's $500 Kinect-like camera works in snow, rain, gloom of night

MIT News Office: Inexpensive 'nano-camera' can operate at the speed of light

New Scientist: Camera that sees through fog could make driving safer

GigaOM: MIT camera could help avoid collisions, boost medical imaging

The Financial Express: Now, 'nano-camera' that operates at the speed of light

Softpedia: MIT Motion Sensing Camera Can Handle Translucent Objects

CNN Money: MIT camera could help avoid collisions, boost medical imaging

Gizmodo: MIT's New $500 Kinect-Like Camera Even Works with Translucent Objects

Huffington Post: 'Speed of Light' Camera can take 3D Pictures for $500

Rafael Whyte, MIT & University of Waikato

Adrian Dorrington, University of Waikato

Lee Streeter, University of Waikato

Diony Rosa, MIT

Christopher Barsi, MIT

Hang Zhao, MIT

Boxin Shi, MIT

Lucas Lima, MIT

Vijay Sadashivaiah, MIT

Please contact Jamie Schiel for fabrication questions: schiel@mit.edu